AI is having a moment, and the chips that power it are at the center of a heated competition among tech giants. According to some experts, the AI chip market is expected to grow to US$400 billion. Companies like Amazon are designing their own custom AI chips to meet the specific demands of AI workloads, while established chipmakers like Nvidia are fighting to maintain their market share.

Why AI Chips Are Different

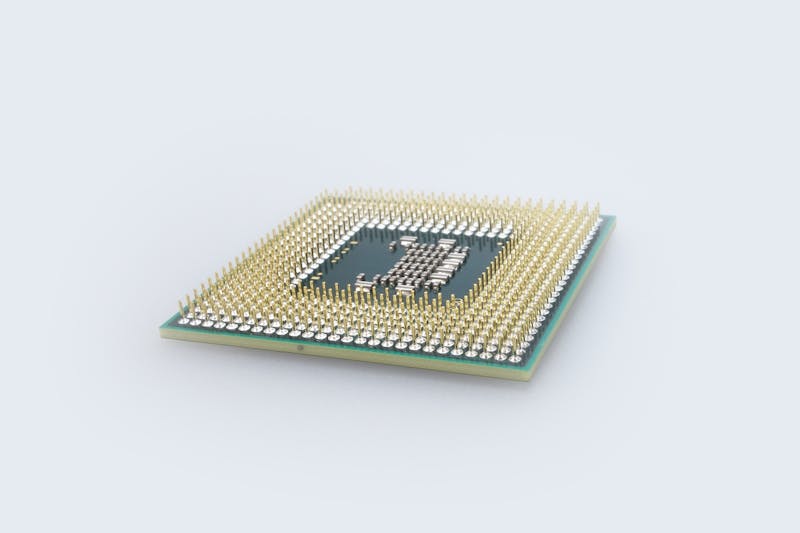

Traditional CPUs, like the one in your laptop, are powerful but have a limited number of cores. These cores process information sequentially, one calculation after another, which is inefficient for AI tasks that often involve processing massive amounts of data in parallel. AI chips address this with a much larger number of smaller cores. Each core typically does less than a CPU core but is designed specifically for AI calculations, and because the cores work simultaneously, they significantly speed up tasks like image recognition and natural language processing.
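To make the sequential-versus-parallel contrast concrete, here is a minimal Python sketch. It computes the same dot product two ways: one multiply-add at a time, and split into independent chunks handed to a pool of workers. The function names and the choice of four workers are assumptions made for illustration. Python threads will not actually run this faster, so treat it as a picture of how the work is divided, not as a model of real chip performance.

```python
from concurrent.futures import ThreadPoolExecutor


def dot_sequential(a, b):
    """One multiply-add after another, like a single core working alone."""
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


def dot_chunked(a, b, workers=4):
    """Split the vectors into independent chunks and sum each chunk separately.

    Each chunk's partial sum does not depend on any other chunk, which is
    the property that lets many small cores work at the same time.
    """
    size = max(1, (len(a) + workers - 1) // workers)  # ceiling division
    chunks = [(a[i:i + size], b[i:i + size]) for i in range(0, len(a), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(lambda pair: dot_sequential(*pair), chunks))
    return sum(partials)


a = [1.0, 2.0, 3.0, 4.0, 5.0]
b = [2.0, 2.0, 2.0, 2.0, 2.0]
print(dot_sequential(a, b), dot_chunked(a, b))
```

Both calls return the same result. The difference is that the chunked version exposes pieces of work that can be done at once, which is the kind of workload the many-core design described above is built for.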

The Training vs. Inference Divide

There are two main types of AI chips: training chips and inference chips. Training chips are used to develop AI models by feeding them massive datasets. For instance, an AI model designed to recognise cats would be trained on millions of cat images. Training is usually done on tens of thousands of chips.

Inference chips, on the other hand, use the trained models to generate outputs. These chips are typically found in consumer-facing products like chatbots and image generators. Inference, by contrast with training, is typically done on 12 to 16 chips.
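The split between the two phases can be illustrated with a toy Python sketch. It is a deliberately tiny stand-in, assuming a one-parameter model y = w * x fitted by gradient descent; the function names, learning rate, and data are hypothetical and have nothing to do with how production models or chips are built. The point is only the shape of the work: training loops over the whole dataset many times, while inference is a single cheap pass through the finished model.

```python
def train(samples, epochs=200, lr=0.01):
    """Training: repeatedly sweep the dataset and nudge the weight w
    to reduce the mean squared error of the prediction w * x."""
    w = 0.0
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in samples) / len(samples)
        w -= lr * grad
    return w


def infer(w, x):
    """Inference: apply the already-trained weight to a new input."""
    return w * x


# Toy dataset that follows y = 2 * x.
samples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w = train(samples)            # expensive: 200 passes over the data
prediction = infer(w, 10.0)   # cheap: one multiplication
print(round(w, 3), round(prediction, 3))
```

Even in this toy case, training touches every sample hundreds of times while inference does one multiplication, which hints at why the two jobs are given different hardware.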

Processing all of that information demands a lot of energy, which generates heat. Chips are therefore tested across a range of temperatures to confirm that they remain reliable at both very low and very high extremes. To help keep chips cool, they’re attached to heat sinks, pieces of metal with vents that help dissipate heat.

Amazon Challenges Nvidia

Amazon is a major player in the AI chip race with its Inferentia and Trainium lines. Inferentia chips are designed for inference, while Trainium tackles the compute-intensive task of training AI models. Amazon argues that custom chips allow it to optimize its cloud services for AI tasks, offering better performance and potentially lower costs compared to relying solely on Nvidia chips.

The Future of AI Chips

The AI chip market is still young, but experts believe it has vast potential. As AI technology continues to advance, so too will the need for faster, more efficient chips. This will likely lead to even fiercer competition among tech companies and chipmakers.

Looking ahead, the key question is how much cloud providers will rely on custom chips versus established players like Nvidia. This battle will be fought in corporate boardrooms around the world, but the outcome will impact the future of AI for everyone.